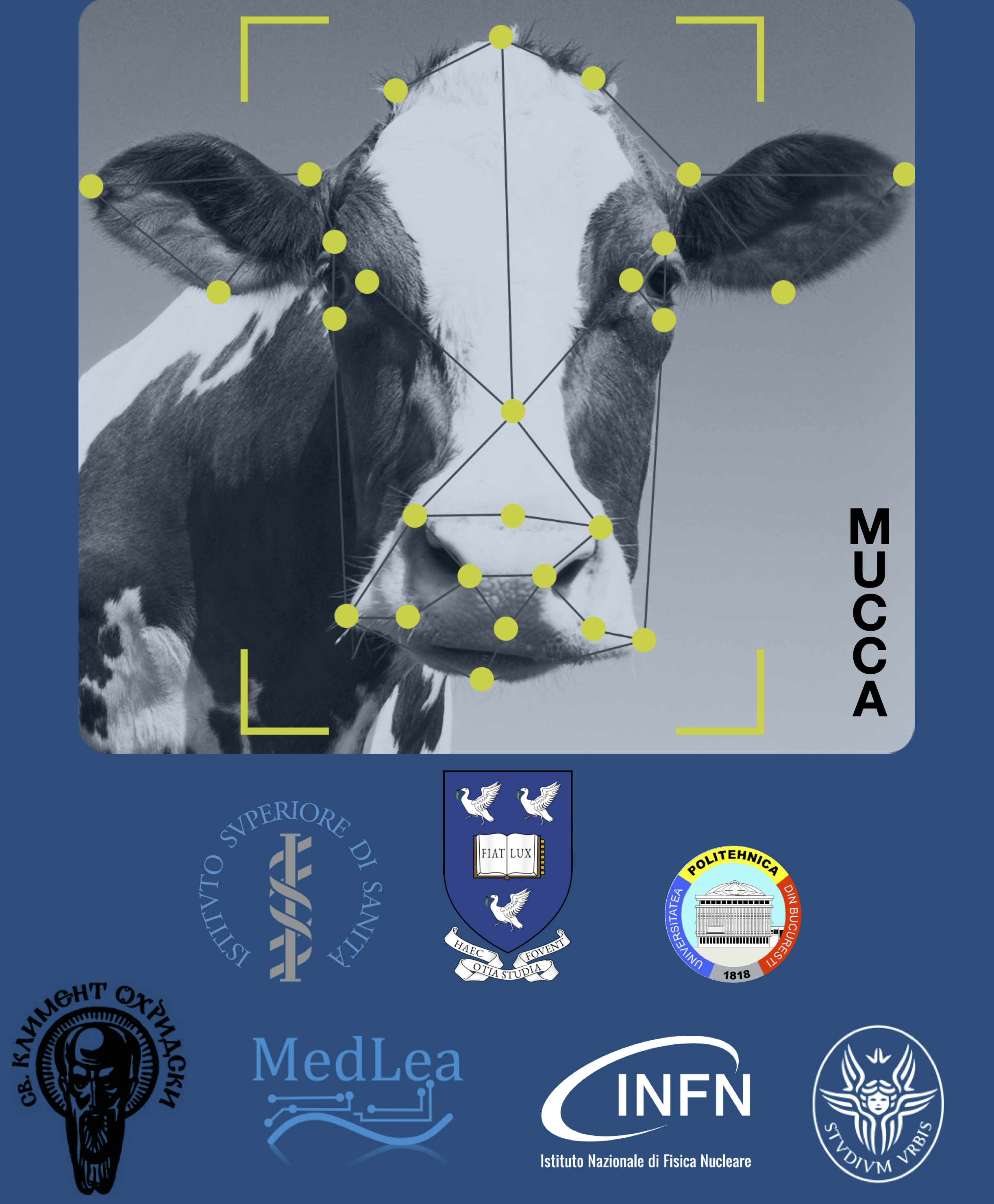

MUCCA

MUCCA - Multi-disciplinary Use Cases for Convergent new Approaches to AI explainability

ID Call: Call 2019 for Research Proposals - Explainable Machine Learning-based Artificial Intelligence

Funding programme: CHIST-ERA IV - European Coordinated Research on Long-term ICT and ICT-based Scientific and Technological Challenges

Sapienza's role in the project: Coordinator

Scientific supervisor for Sapienza: Stefano Giagu

Department: Physics

Project start date: February 1, 2021

Project end date: January 31, 2024

Project Abstract:

Developing and testing methodologies to interpret the predictions of AI algorithms in terms of transparency, interpretability, and explainability has become one of the most important open issues in Artificial Intelligence today. The MUCCA project brings together researchers from different fields with complementary skills essential for understanding the behaviour of AI algorithms. These algorithms will be studied on a set of multidisciplinary use cases in which explainable AI can play a crucial role; the use cases will serve to quantify the strengths of the explainable AI methods available in different application contexts and to highlight, and possibly resolve, their weaknesses. The proposed use cases range from AI applications for high-energy physics (HEP) to AI applied to medical imaging, functional imaging, and neuroscience. For each use case, the research is divided into three phases. In the first phase, state-of-the-art explainability techniques, chosen according to the requirements of each use case, will be developed and applied. In the second phase, the shortcomings of the different techniques will be identified; scalability problems with raw, high-dimensional data, where noise may dominate the signal of interest, will be studied; and the level of certifiability offered by each algorithm will be analysed. In the final phase, new algorithmic methodologies and application pipelines will be designed for the HEP, medical, and neuroscientific use cases.

The operational structures involved are the Department of Physics (Applied Physics and High Energy Physics groups), the Department of Physiology and Pharmacology (Behavioural Neuroscience group), and the DIET Department. The project's activities concern the study and application of innovative methodologies to explain modern Artificial Intelligence algorithms (explainable AI, or xAI) in different application contexts: high-energy physics, real-time signal analysis, applied medical physics, and neuroscience. The proposed research combines theoretical studies with computational and experimental developments, including the analysis of simulated and real data, the construction of new AI models, and the definition and implementation of general end-to-end procedures for applying xAI methods in research (basic and applied) as well as in social and business contexts.